Create an extension with basic operators

In this tutorial, you will build a Python extension with a basic set of operators. By the end of the tutorial, you will be able to do the following:

- Create operators with different parameter types

- Create different input and output ports for the operators

- Log messages in the Log console of AI Studio

To follow the tutorial, you will need to set up a conda environment and install Altair AI Studio 2025.1 with an Altair Units license.

Set up a new conda environment

Let's start by creating a new conda environment that you will use on the development machine.

conda create -n sample-environment python=3.12

conda activate sample-environment

conda install altair-aitools-devkit -c conda-forge
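Before moving on, it can help to confirm that the activated environment actually contains the devkit. The following is a minimal sketch that uses only the standard library; the helper name installed_version is hypothetical, and the distribution name is taken from the install command above.

```python
import sys
from importlib import metadata
from typing import Optional


def installed_version(distribution: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(distribution)
    except metadata.PackageNotFoundError:
        return None


# Report the interpreter version and whether the devkit is visible to it.
print("Python:", ".".join(str(part) for part in sys.version_info[:3]))
print("altair-aitools-devkit:", installed_version("altair-aitools-devkit"))
```

If the second line prints None, the package is not installed in the environment that is currently active.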

Create a new project

Next, create a blank project for the extension.

mkdir python-extension-sample

cd python-extension-sample

create-extension

- The create-extension command will ask whether to create a blank or a sample extension. Enter blank.

- Enter the name of the extension: python-extension-sample

- Enter the version number: keep the default by pressing Enter.

- Enter the author name: you can enter your name or leave it blank.

Once created, open the project in VS Code (or your favorite IDE).

Extension Configuration

By default, the extension.toml file is created with all the details filled in. Open the file and you should see the following:

[extension]

name = "python-extension-sample"

version = "0.0.1"

authors = [""]

namespace = "pysa"

module = "python-extension-sample"

Next, let's start adding some basic operators.

Create operators

First, create a new directory called basic_operators under the python-extension-sample folder.

Second, add the __init__.py file under the basic_operators folder.

Finally, to hold the operator functions, create a new file parameter_types.py under the basic_operators folder.
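The three steps above can also be scripted. The sketch below uses pathlib and runs against a temporary directory so it does not touch a real project; the function name scaffold_basic_operators is hypothetical.

```python
import tempfile
from pathlib import Path


def scaffold_basic_operators(project_root: Path) -> list[Path]:
    """Create basic_operators/ with an empty __init__.py and parameter_types.py."""
    package_dir = project_root / "basic_operators"
    package_dir.mkdir(parents=True, exist_ok=True)
    created = []
    for file_name in ("__init__.py", "parameter_types.py"):
        file_path = package_dir / file_name
        file_path.touch(exist_ok=True)  # leaves existing files untouched
        created.append(file_path)
    return created


# Demonstrate on a throwaway directory instead of the real project folder.
with tempfile.TemporaryDirectory() as tmp:
    for path in scaffold_basic_operators(Path(tmp) / "python-extension-sample"):
        print(path.relative_to(tmp))
```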

Next, we will add some Python functions.

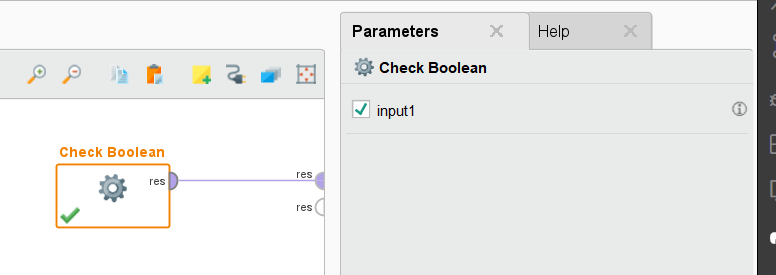

Boolean parameter

To add a boolean parameter, you can define a function with the bool datatype in the argument.

- Add a new function called check_boolean with a parameter input1 of the bool datatype.

- Add DataFrame as the return type.

from pandas import DataFrame


def check_boolean(input1: bool) -> DataFrame:
    """This Operator checks if the given value is a boolean

    Args:
        input1 (bool): true/false boolean value from the user

    Returns:
        DataFrame: input to studio O/P - dataframe with true if boolean is presented as the input
    """
    return DataFrame([isinstance(input1, bool)])
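Because the operator is an ordinary Python function, it can be called directly before building anything. The sketch below repeats the function body so it runs on its own; it says nothing about how AI Studio passes the parameter value at run time.

```python
from pandas import DataFrame


def check_boolean(input1: bool) -> DataFrame:
    """Same body as the operator above, repeated so this sketch is self-contained."""
    return DataFrame([isinstance(input1, bool)])


# The result is a one-row, one-column dataframe holding the check result.
print(check_boolean(True).iloc[0, 0])   # True
print(check_boolean("yes").iloc[0, 0])  # False
```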

Build extension

Before adding new operators, let's check that we can build the extension and see the operator in Altair AI Studio.

- Add a new __init__.py file under the python-extension-sample folder.

- Import the functions from the parameter_types.py file in the basic_operators folder.

from .basic_operators.parameter_types import *

- Add the operator definition in the extension.toml file with group_key as basic.parameter_types.

[operators.check_boolean]

group_key = "basic.parameter_types"

- Open the terminal and build the extension using the build-extension command.

On Windows

build-extension -o "%USERPROFILE%\.AltairRapidMiner\AI Studio\shared\extensions\python-extensions" .

On macOS/Linux

build-extension -o "$HOME/.AltairRapidMiner/AI Studio/shared/extensions/python-extensions" .

- Once built, open Altair AI Studio and, from the menu, go to Extensions -> About Installed Python Extensions.

- Wait for the Python Samples extension to be Ready.

Now, let's add the operator to the canvas and run the process.

- Run the process, and see the output.
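The output directory passed to build-extension in the commands above differs between platforms only in the home directory and the path separator. As a sketch, it can be assembled portably with pathlib; the helper names are hypothetical, and the assumption is that the folder layout under the home directory is the same on every platform, as the commands above suggest.

```python
from pathlib import Path
from typing import Optional


def python_extensions_dir(home: Optional[Path] = None) -> Path:
    """Build the shared python-extensions folder under the given (or current) home."""
    base = home if home is not None else Path.home()
    return base / ".AltairRapidMiner" / "AI Studio" / "shared" / "extensions" / "python-extensions"


def build_command(project_dir: str = ".") -> list[str]:
    """Return the build-extension call as an argument list (nothing is executed here)."""
    return ["build-extension", "-o", str(python_extensions_dir()), project_dir]


print(build_command())
```

Passing the arguments as a list, for example to subprocess.run, avoids quoting problems caused by the space in "AI Studio".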

Let's continue adding more operators.

Numeric parameters

We will add functions that define an integer and a float parameter.

- Add a new function called check_integer with an argument input1 of the int datatype.

- Add DataFrame as the return type.

def check_integer(input1: int) -> DataFrame:
    """This Operator checks if the given value is an integer

    Args:
        input1 (int): any integer value from the user

    Returns:
        DataFrame: input to studio O/P - dataframe with true if integer is presented as the input
    """
    return DataFrame([isinstance(input1, int)])

- Add the operator configuration to the extension.toml file.

[operators.check_integer]

group_key = "basic.parameter_types"

- Similarly, add a new function check_float to check if the parameter is a float.

- Add DataFrame as the return type.

def check_float(input1: float) -> DataFrame:
    """This Operator checks if the given value is a float

    Args:
        input1 (float): any float value from the user

    Returns:
        DataFrame: input to studio O/P - dataframe with true if float is presented as the input
    """
    return DataFrame([isinstance(input1, float)])

- Add the operator configuration to the extension.toml file.

[operators.check_float]

group_key = "basic.parameter_types"

Note: You can build the extension after adding the functions. Follow the steps described in the Build extension section.
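One detail of isinstance is worth knowing when checking numeric types in plain Python: bool is a subclass of int, so a boolean passes an int check, while an int does not pass a float check. The sketch below illustrates this with two hypothetical helpers; how AI Studio converts parameter values before calling the function is not covered here.

```python
def is_strict_int(value: object) -> bool:
    """True for int values but False for booleans (hypothetical helper)."""
    return isinstance(value, int) and not isinstance(value, bool)


def is_real_number(value: object) -> bool:
    """True for int or float values, excluding booleans (hypothetical helper)."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)


print(isinstance(True, int))   # True, because bool subclasses int
print(is_strict_int(True))     # False
print(is_strict_int(3))        # True
print(isinstance(3, float))    # False, an int is not a float
print(is_real_number(3))       # True
```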

String parameter

- Add a new function with an argument of the string type.

def check_string(input1: str) -> DataFrame:
    """This Operator checks if the given value is a string

    Args:
        input1 (str): any string value from the user

    Returns:
        DataFrame: input to studio O/P - dataframe with true if string is presented as the input
    """
    return DataFrame([isinstance(input1, str)])

- Add the operator configuration to the extension.toml file.

[operators.check_string]

group_key = "basic.parameter_types"

Note: You can build the extension after adding the functions. Follow the steps described in the Build extension section.

Category parameter

You can define a parameter with a fixed set of constants using Python Enums. Create a new class that extends Enum with three values, and a function check_enum that uses it as the parameter type.

In this example, the enum values are scaler classes from sklearn.preprocessing; check_enum itself only checks the type of the selected value.

Note: use conda or pip to install scikit-learn in the conda environment.

from enum import Enum

from sklearn.preprocessing import MaxAbsScaler, MinMaxScaler, StandardScaler


class Scaler(Enum):
    """Supported normalization methods."""

    MAX_ABSOLUTE = MaxAbsScaler
    MIN_MAX = MinMaxScaler
    STANDARD = StandardScaler


def check_enum(input1: Scaler) -> DataFrame:
    """This Operator checks if the given value is an enum

    Args:
        input1 (Scaler): any enum value from the user

    Returns:
        DataFrame: input to studio O/P - dataframe with true if enum is presented as the input
    """
    return DataFrame([isinstance(input1, Scaler)])

- Add the operator configuration to the extension.toml file.

[operators.check_enum]

group_key = "basic.parameter_types"

Note: You can build the extension after adding the functions. Follow the steps described in the Build extension section.
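Storing a class as the enum value makes it possible to go one step further than a type check: the selected member can be instantiated and applied to data. The sketch below shows the idea in plain Python. To keep it runnable without scikit-learn, it uses two small stand-in classes that are hypothetical and only mimic the fit_transform method; the function name scale is also an assumption.

```python
from enum import Enum

from pandas import DataFrame


class MinMaxStandIn:
    """Hypothetical stand-in for a min-max scaler: maps each column to [0, 1]."""

    def fit_transform(self, data: DataFrame) -> DataFrame:
        return (data - data.min()) / (data.max() - data.min())


class MaxAbsStandIn:
    """Hypothetical stand-in for a max-abs scaler: divides by the largest magnitude."""

    def fit_transform(self, data: DataFrame) -> DataFrame:
        return data / data.abs().max()


class Scaler(Enum):
    MAX_ABSOLUTE = MaxAbsStandIn
    MIN_MAX = MinMaxStandIn


def scale(data: DataFrame, method: Scaler = Scaler.MIN_MAX) -> DataFrame:
    """Instantiate the class stored in the enum member and apply it to the data."""
    scaler = method.value()
    scaled = scaler.fit_transform(data)
    # Re-wrap so the result keeps the original labels even if an array is returned.
    return DataFrame(scaled, columns=data.columns, index=data.index)


data = DataFrame({"a": [-4.0, 2.0, 4.0]})
print(scale(data, Scaler.MIN_MAX)["a"].tolist())       # [0.0, 0.75, 1.0]
print(scale(data, Scaler.MAX_ABSOLUTE)["a"].tolist())  # [-1.0, 0.5, 1.0]
```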

In the following section, we will add operators with different input and output ports.

- Create a new file port_combination.py under the basic_operators folder.

- To use all the operators that we will define in this section, import them in the __init__.py file in the python-extension-sample folder.

from .basic_operators.port_combination import *

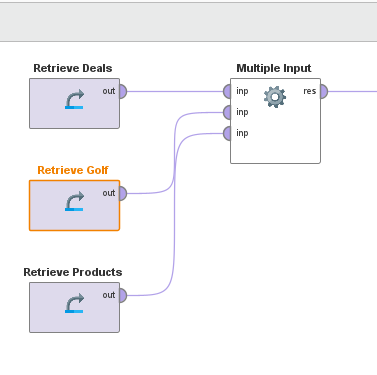

Multiple Input ports

In this function, let's add three input dataframes and return the combined dataframe.

- Create a new function called multiple_input in the port_combination.py file.

import pandas as pd
from pandas import DataFrame


def multiple_input(input1: DataFrame, input2: DataFrame, input3: DataFrame) -> DataFrame:
    """This operator accepts multiple inputs and combines them into one dataframe

    Returns:
        DataFrame: combined dataframe
    """
    return pd.concat([input1, input2, input3], axis=1)

- Add the operator configuration to the extension.toml file.

[operators.multiple_input]

group_key = "basic.port_combinations"

Note: You can build the extension after adding the functions. Follow the steps described in the Build extension section.

When built, the operator accepts three dataframes as input and returns a combined dataframe as the result.
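Note that pd.concat with axis=1 places the inputs side by side and aligns rows on the index, so inputs with different index labels produce extra rows with missing values. The sketch below uses made-up sample data to show the effect and one possible way to align by position instead.

```python
import pandas as pd
from pandas import DataFrame

# Made-up inputs; the third one deliberately has a different index.
left = DataFrame({"id": [1, 2]})
middle = DataFrame({"score": [0.5, 0.7]})
right = DataFrame({"label": ["a", "b"]}, index=[1, 2])

# Index labels 0, 1 and 2 are all kept, so the result has three rows.
combined = pd.concat([left, middle, right], axis=1)
print(combined.shape)  # (3, 3)

# Resetting the index first aligns the rows by position.
aligned = pd.concat([left, middle, right.reset_index(drop=True)], axis=1)
print(aligned.shape)   # (2, 3)
```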

Next, let's add an operator with multiple outputs.

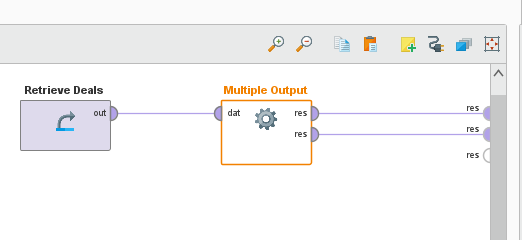

Multiple Output ports

To add multiple outputs to an operator, you can use a tuple to combine them.

- Add a new function multiple_output.

- For the return value, combine the dataframes in a tuple.

def multiple_output(data: DataFrame) -> tuple[DataFrame, DataFrame]:
    """This Operator splits the given input into multiple outputs by splitting its rows randomly

    Args:
        data (DataFrame): Dataframe to be split

    Returns:
        tuple[DataFrame,DataFrame]: splits the given data rows randomly
    """
    # Shuffle the rows, then cut the shuffled frame at its midpoint.
    shuffled = data.sample(frac=1)
    half = shuffled.shape[0] // 2
    output1 = shuffled.iloc[:half, :]
    output2 = shuffled.iloc[half:, :]
    return (output1, output2)

- Add the operator configuration to the extension.toml file.

[operators.multiple_output]

group_key = "basic.port_combinations"

Note: You can build the extension after adding the functions. Follow the steps described in the Build extension section.
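A random row split is hard to debug if it changes on every run. The following sketch shows one way to make such a split reproducible by passing a seed to DataFrame.sample; the function name split_rows and the seed parameter are hypothetical and not part of the tutorial's operator.

```python
from pandas import DataFrame


def split_rows(data: DataFrame, seed: int = 42) -> tuple[DataFrame, DataFrame]:
    """Shuffle the rows with a fixed seed and split them into two halves."""
    shuffled = data.sample(frac=1, random_state=seed)
    half = len(shuffled) // 2
    return shuffled.iloc[:half], shuffled.iloc[half:]


data = DataFrame({"x": range(5)})
first, second = split_rows(data)   # the tuple unpacks into two dataframes
print(len(first), len(second))     # 2 3
```

With an odd number of rows, the second output receives the extra row because the midpoint is rounded down.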

Once built, the operator will look as shown in the image below.

Apart from having multiple outputs, an operator can also provide a list of outputs as a collection. In the next step, we will create a function that returns a collection of outputs.

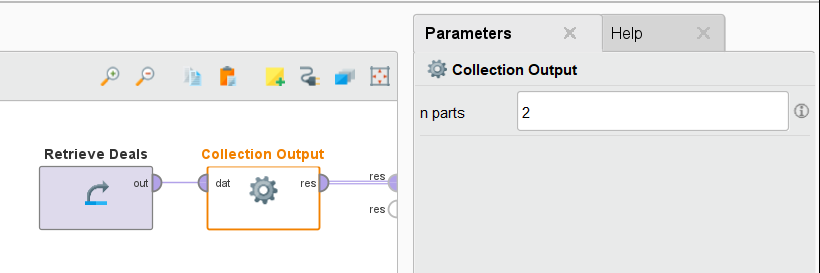

Output Collection

- Add a new function collection_output that has two arguments: a dataframe and an integer.

- The return value of the function will be a list of dataframes.

def collection_output(data: DataFrame, n_parts: int = 2) -> list[DataFrame]:
    """This Operator splits the given input into multiple outputs by splitting its rows

    Args:
        data (DataFrame): Dataframe to be split
        n_parts (int): number of parts to split the data into

    Returns:
        list[DataFrame]: splits the given data rows
    """
    # Named n_rows rather than len to avoid shadowing the built-in.
    n_rows = data.shape[0]
    parts = []
    for i in range(n_parts):
        parts.append(data.iloc[i * n_rows // n_parts : (i + 1) * n_rows // n_parts, :])
    return parts

- Add the operator configuration to the extension.toml file.

[operators.collection_output]

group_key = "basic.port_combinations"

Note: You can build the extension after adding the functions. Follow the steps described in the Build extension section.

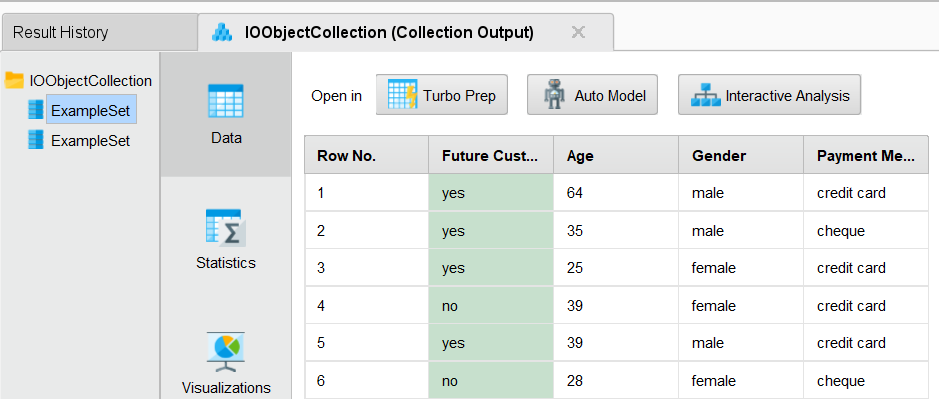

- Create a new process and add the collection output operator to the canvas.

- When run, the output will be a collection of dataframes. In this case, we will see two example sets in the Result view of Altair AI Studio.
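To see how the integer slicing in collection_output distributes rows when the row count is not divisible by n_parts, the same arithmetic can be run locally. The sketch below repeats the logic under the hypothetical name split_into_parts so that it is self-contained.

```python
from pandas import DataFrame


def split_into_parts(data: DataFrame, n_parts: int = 2) -> list[DataFrame]:
    """Slice rows at i * n_rows // n_parts, the same boundaries as collection_output."""
    n_rows = data.shape[0]
    return [
        data.iloc[i * n_rows // n_parts : (i + 1) * n_rows // n_parts, :]
        for i in range(n_parts)
    ]


data = DataFrame({"x": range(10)})
print([len(part) for part in split_into_parts(data, 3)])       # [3, 3, 4]
print([len(part) for part in split_into_parts(data[:2], 3)])   # [0, 1, 1]
```

Every row lands in exactly one part, and asking for more parts than rows yields some empty dataframes.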

Adding Logging

Logging in Python Extensions is managed by Python's standard logging interface. The default configuration is sufficient for optimal operation. Additional configuration changes are neither necessary nor advised, as they may lead to unintended consequences.
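In line with the advice above, the following sketch only obtains a logger and emits messages; it does not add handlers or change levels. The function describe_rows is hypothetical, and which log levels appear in the Log view of AI Studio is not stated in this tutorial.

```python
import logging

# Module-level logger from the standard library; no configuration is applied here.
logger = logging.getLogger(__name__)


def describe_rows(n_rows: int) -> str:
    """Log a warning for empty input and an info message for every call."""
    if n_rows == 0:
        logger.warning("Received an empty input")
    # %-style arguments are only formatted if the message is actually emitted.
    logger.info("Processing %d rows", n_rows)
    return f"{n_rows} rows"


print(describe_rows(3))  # 3 rows
```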

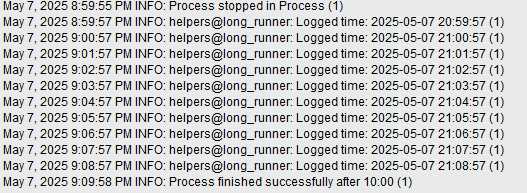

For this tutorial, we will create a long-running function that logs a message once per minute, sleeping in 1-second steps in between.

- Create a new file helpers.py under the python-extension-sample folder and add a new function long_runner.

- Add the following code.

import logging
import time
from datetime import datetime

from pandas import DataFrame


def long_runner(input1: int) -> DataFrame:
    """This Operator runs for a long time

    Args:
        input1 (int): any integer value from the user which represents the time in minutes

    Returns:
        DataFrame: input to studio O/P - dataframe with time column which logs the time every minute
    """
    times = []
    for _ in range(input1):
        current_time = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        times.append(current_time)
        logging.info(f"Logged time: {current_time}")
        # Sleep for one minute in 1-second steps.
        for _ in range(60):
            time.sleep(1)
    return DataFrame(times, columns=["Logged Time"])

- To use the function as the operator, import it in the __init__.py file under the python-extension-sample folder.

from .basic_operators.parameter_types import *

from .basic_operators.port_combination import *

from .helpers import *

- Add the operator configuration in the extension.toml file.

[operators.long_runner]

group_key = "basic.long_runner"

- Build the extension and reload it in Altair AI Studio.

- Create a new process, add the operator to it and run the process.

- Check the logs from the Log view of the Altair AI Studio.
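Waiting a full minute per log entry makes the function slow to try out locally. As a sketch, the same loop can take the pause length as an argument so that it finishes quickly in a test; the name timed_logger and the interval_seconds parameter are hypothetical and not part of the tutorial's operator.

```python
import logging
import time
from datetime import datetime

from pandas import DataFrame


def timed_logger(n_ticks: int, interval_seconds: float = 60.0) -> DataFrame:
    """Log the current time n_ticks times, pausing interval_seconds after each entry."""
    times = []
    for _ in range(n_ticks):
        current_time = datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        times.append(current_time)
        logging.info("Logged time: %s", current_time)
        time.sleep(interval_seconds)
    return DataFrame(times, columns=["Logged Time"])


# A very short interval keeps the local check fast.
print(timed_logger(3, interval_seconds=0.01).shape)  # (3, 1)
```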

Final thoughts

This is the end of the tutorial on creating a basic set of operators and parameters with the altair-aitools-devkit package.

In this tutorial, we learned how to add different parameter types, such as boolean, integer, float, string, and enum, and saw how to define different input and output combinations.

Want to move on to a more advanced set of operators? Go to the next tutorial on creating advanced operators.

If you have any questions or feedback, feel free to post a query on our community page.