RapidMiner Go documentation, version 9.7

Multiclass classification

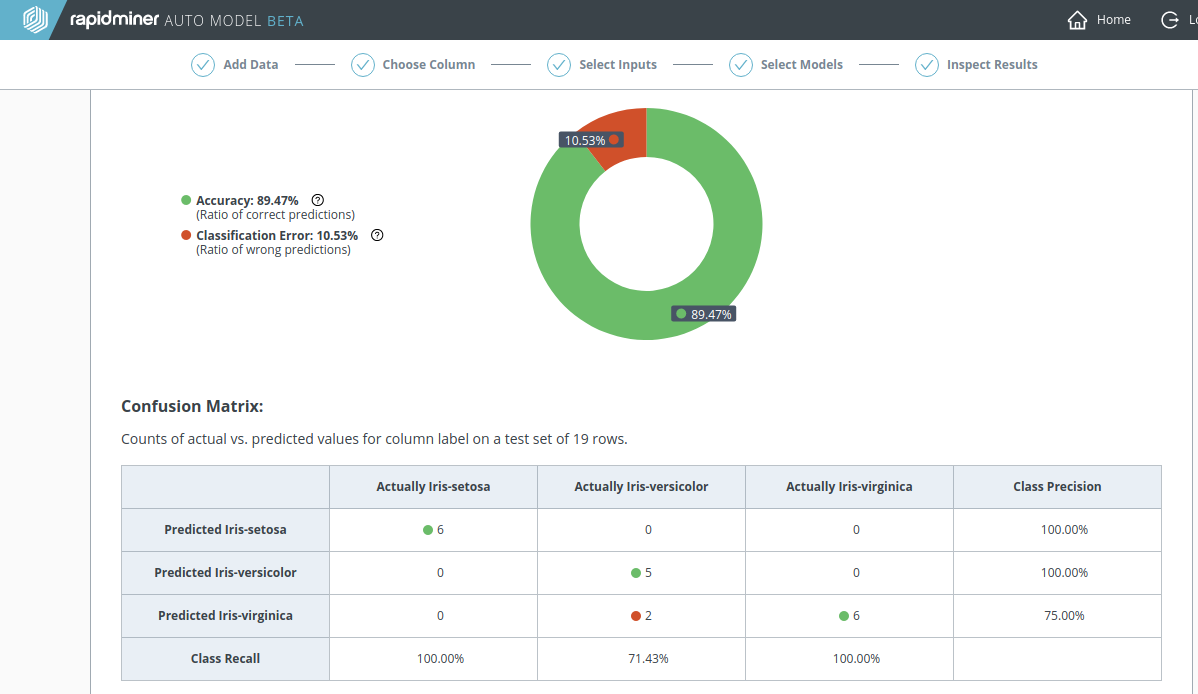

Performance metrics

We have already discussed binary classification at some length, and multiclass classification is similar, so here we will concentrate on the differences. The primary difference is that there are not just two possible outcomes, but three or more.

Assuming that all of the possible outcomes are equally interesting to you, with no special emphasis on any of them, it's reasonable to choose Accuracy as your performance metric, and to prefer the model with the highest Accuracy.
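As a rough illustration of choosing a model by Accuracy, the sketch below computes Accuracy for two candidate models and picks the higher one. The labels, the predictions, and the names `model_1` and `model_2` are all hypothetical; this is not how RapidMiner Go computes its numbers internally, only the standard definition (correct predictions divided by all predictions), with Classification Error taken as 1 minus Accuracy.

```python
def accuracy(actual, predicted):
    """Fraction of predictions that match the actual class."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    if not actual:
        raise ValueError("need at least one example")
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)


# Hypothetical test-set labels and the predictions of two hypothetical models.
actual = ["A", "B", "C", "A", "B", "C", "A", "B"]
model_1 = ["A", "B", "C", "A", "C", "C", "A", "B"]  # 1 wrong prediction
model_2 = ["A", "C", "C", "B", "C", "C", "A", "B"]  # 3 wrong predictions

scores = {
    "model_1": accuracy(actual, model_1),
    "model_2": accuracy(actual, model_2),
}

# Classification Error is the complement of Accuracy.
classification_error = {name: 1 - acc for name, acc in scores.items()}

# Prefer the model with the highest Accuracy.
best = max(scores, key=scores.get)
print(scores, classification_error, best)
```

Because every outcome counts equally here, a single number per model is enough to rank them; the per-class view only matters once some outcomes are more important than others.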

When there are N possible outcomes, the confusion matrix has N × N elements. The correct predictions are on the diagonal, and incorrect predictions are off-diagonal. Although the summary performance table in the Model Comparison only mentions Accuracy and Classification Error, you can still find the analogues of Precision and Recall by looking at the Performance tab for each individual model. Precision is computed for each row (one row per predicted class); Recall is computed for each column (one column per actual class).

| | Actually A | Actually B | Actually C | Precision |
|---|---|---|---|---|
| Predicted A | True A | | | True A / Predicted A |
| Predicted B | | True B | | True B / Predicted B |
| Predicted C | | | True C | True C / Predicted C |
| Recall | True A / Actually A | True B / Actually B | True C / Actually C | |
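The row and column formulas in the table can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the function name `per_class_metrics` and the counts in `matrix` are hypothetical, and the layout follows the table (rows are predicted classes, columns are actual classes).

```python
def per_class_metrics(matrix, labels):
    """Compute Accuracy plus per-class Precision and Recall.

    matrix[i][j] is the number of examples predicted as labels[i]
    whose actual class is labels[j] (rows = predicted, columns = actual).
    """
    n = len(labels)
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(matrix[i][i] for i in range(n))  # the diagonal

    precision, recall = {}, {}
    for i, label in enumerate(labels):
        predicted_count = sum(matrix[i])                    # row total
        actual_count = sum(matrix[r][i] for r in range(n))  # column total
        # None marks a class that was never predicted / never occurred.
        precision[label] = matrix[i][i] / predicted_count if predicted_count else None
        recall[label] = matrix[i][i] / actual_count if actual_count else None

    return {"accuracy": correct / total, "precision": precision, "recall": recall}


# Hypothetical counts for a three-class problem.
labels = ["A", "B", "C"]
matrix = [
    [8, 1, 0],  # Predicted A
    [2, 6, 1],  # Predicted B
    [0, 1, 6],  # Predicted C
]

metrics = per_class_metrics(matrix, labels)
print(metrics)
```

Precision divides each diagonal entry by its row total and Recall divides it by its column total, so the same correct-prediction count feeds both metrics.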

In the example below, a multiclass classification problem with three possible outcomes, the model made two wrong predictions when applied to the test set; they are indicated by a red mark in the confusion matrix. Both errors fall in the cell where the second column meets the third row, so the Recall for the second column (5/7) and the Precision for the third row (6/8) are less than 100%.
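To check the arithmetic, the sketch below tallies a confusion matrix from (predicted, actual) pairs and recomputes the two values. The class names A, B, and C are borrowed from the table above for illustration, and the count of correctly predicted A examples is an assumption, since the text does not state it; only the 5/7 and 6/8 figures come from the example.

```python
from collections import Counter
from fractions import Fraction

labels = ["A", "B", "C"]

# One (predicted, actual) pair per test example.
pairs = (
    [("A", "A")] * 4    # assumed count: not given in the text
    + [("B", "B")] * 5  # 5 of the 7 actual B examples are predicted correctly
    + [("C", "C")] * 6  # 6 of the 8 predicted C examples are correct
    + [("C", "B")] * 2  # the two wrong predictions: actually B, predicted C
)

counts = Counter(pairs)

# Rows are predicted classes, columns are actual classes.
matrix = [[counts[(p, a)] for a in labels] for p in labels]

# Recall for column B: correct B predictions / all examples that are actually B.
recall_b = Fraction(counts[("B", "B")], sum(counts[(p, "B")] for p in labels))

# Precision for row C: correct C predictions / all examples predicted as C.
precision_c = Fraction(counts[("C", "C")], sum(counts[("C", a)] for a in labels))

print(matrix)
print(recall_b, precision_c)
```

Note that `Fraction` reduces 6/8 to 3/4 when printed; the value is the same. Every other Precision and Recall in this tally is 100%, because the two errors affect only one row and one column.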